Leveraging Uniformization and Sparsity for Estimation and Computation of Continuous-Time Dynamic Discrete Choice Games

Jason R. Blevins.

The Ohio State University, Department of Economics

Working Paper.

Availability:

- Working paper | arXiv (updated November 7, 2025)

- Replication code

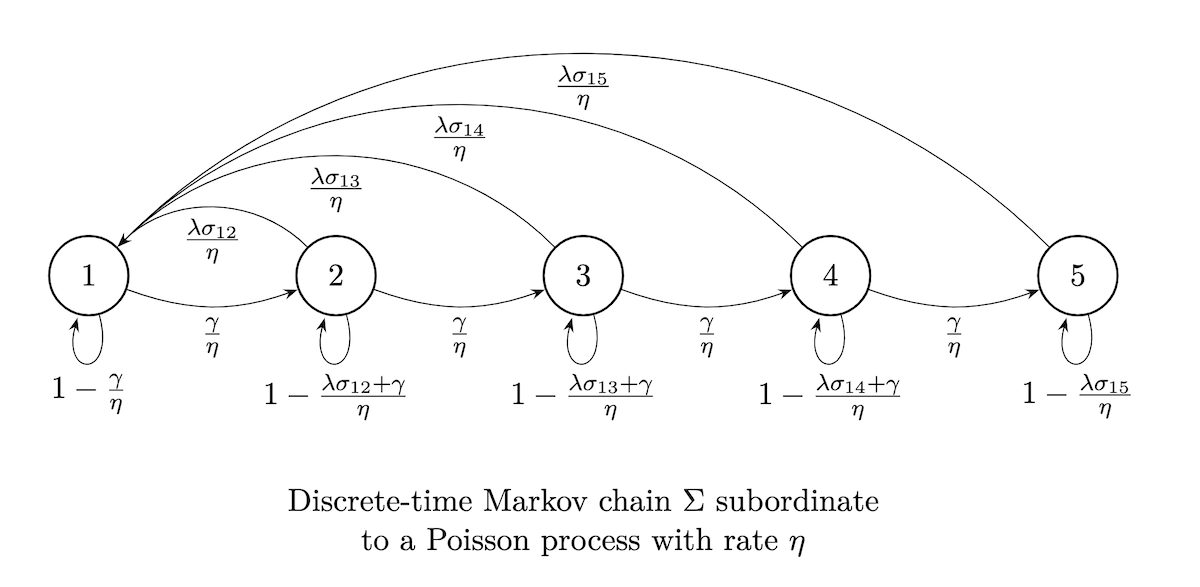

Abstract. Continuous-time empirical dynamic discrete choice games offer notable computational advantages over discrete-time models. This paper addresses remaining computational and econometric challenges to further improve both model solution and estimation. We establish convergence rates for value iteration and policy evaluation with fixed beliefs, and develop Newton-Kantorovich methods that exploit analytical Jacobians and sparse matrix structure. We apply uniformization both to derive a new representation of the value function that draws direct analogies to discrete-time models and to enable stable computation of the matrix exponential and its parameter derivatives for estimation with discrete-time snapshot data, a common but challenging data scenario. These methods provide a complete chain of analytical derivatives from the value function for a given equilibrium through the log-likelihood function, eliminating the need for numerical differentiation and improving finite-sample estimation accuracy and computational efficiency. Monte Carlo experiments demonstrate substantial gains in both statistical performance and computational efficiency, enabling researchers to estimate richer models of strategic interaction. While we focus on games, our methods extend to single-agent dynamic discrete choice and continuous-time Markov jump processes.
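To make the uniformization idea in the abstract concrete, here is a minimal sketch of computing the transition matrix P(Δ) = exp(QΔ) of a finite-state continuous-time Markov jump process with intensity matrix Q. It is not the paper's implementation or its replication code. Choosing a rate γ ≥ max_i |q_ii| turns Q into the discrete-time stochastic matrix M = I + Q/γ. Then exp(QΔ) becomes a Poisson(γΔ)-weighted mixture of the powers M^k. Every term in that sum is non-negative, which is why the approach is numerically stable. The function name `expm_uniformization`, the tolerance `tol`, and the truncation rule are illustrative assumptions. The paper's parameter derivatives and sparse-matrix handling are not shown.

```python
import numpy as np


def expm_uniformization(Q, t, tol=1e-12, max_terms=10_000):
    """Approximate P(t) = exp(Q t) for an intensity matrix Q by uniformization.

    Assumes Q has non-negative off-diagonal entries and rows summing to zero.
    Hypothetical helper for illustration only.
    """
    Q = np.asarray(Q, dtype=float)
    n = Q.shape[0]
    # Uniformization rate: any gamma >= max_i |q_ii| is valid.
    gamma = float(np.max(-np.diag(Q)))
    if gamma == 0.0:
        return np.eye(n)  # no state ever changes, so P(t) = I
    # Stochastic matrix of the uniformized discrete-time chain.
    M = np.eye(n) + Q / gamma
    lam = gamma * t  # expected number of uniformized jumps in [0, t]
    if lam > 700.0:
        # exp(-lam) would underflow; this simple sketch does not handle it.
        raise ValueError("gamma * t too large; split t into smaller steps")
    weight = np.exp(-lam)  # Poisson(lam) probability of 0 jumps
    term = np.eye(n)       # M^0
    P = weight * term
    cumulative = weight
    k = 0
    # Add Poisson-weighted powers of M until the omitted mass is below tol.
    while cumulative < 1.0 - tol and k < max_terms:
        k += 1
        term = term @ M       # M^k
        weight *= lam / k     # Poisson(lam) probability of k jumps
        P += weight * term
        cumulative += weight
    return P
```

For snapshot data observed at interval Δ, row k of P(Δ) gives the probabilities of each state at the next observation given state k. The log-likelihood is then a sum of log P(Δ) entries over the observed transitions. When Q is sparse, the same recursion can be run with sparse matrix-vector products on individual rows rather than with dense matrix powers. Because exp(Qt) = exp(Qt/2)^2, large intervals can be handled by splitting t.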

Keywords: Continuous time, Markov decision processes, dynamic discrete choice, dynamic stochastic games, maximum likelihood estimation, uniformization, matrix exponential, analytical derivatives, finite-sample properties.

JEL Classification: C13, C63, C73, L13.

BibTeX Record:

@TechReport{blevins-2025,
  author = {Jason R. Blevins},
  title = {Leveraging Uniformization and Sparsity for Estimation and Computation of Continuous-Time Dynamic Discrete Choice Games},
  type = {Working Paper},
  institution = {The Ohio State University},
  year = 2025
}